Probability Integral: Detailed Explanation, Proofs, and Derivations

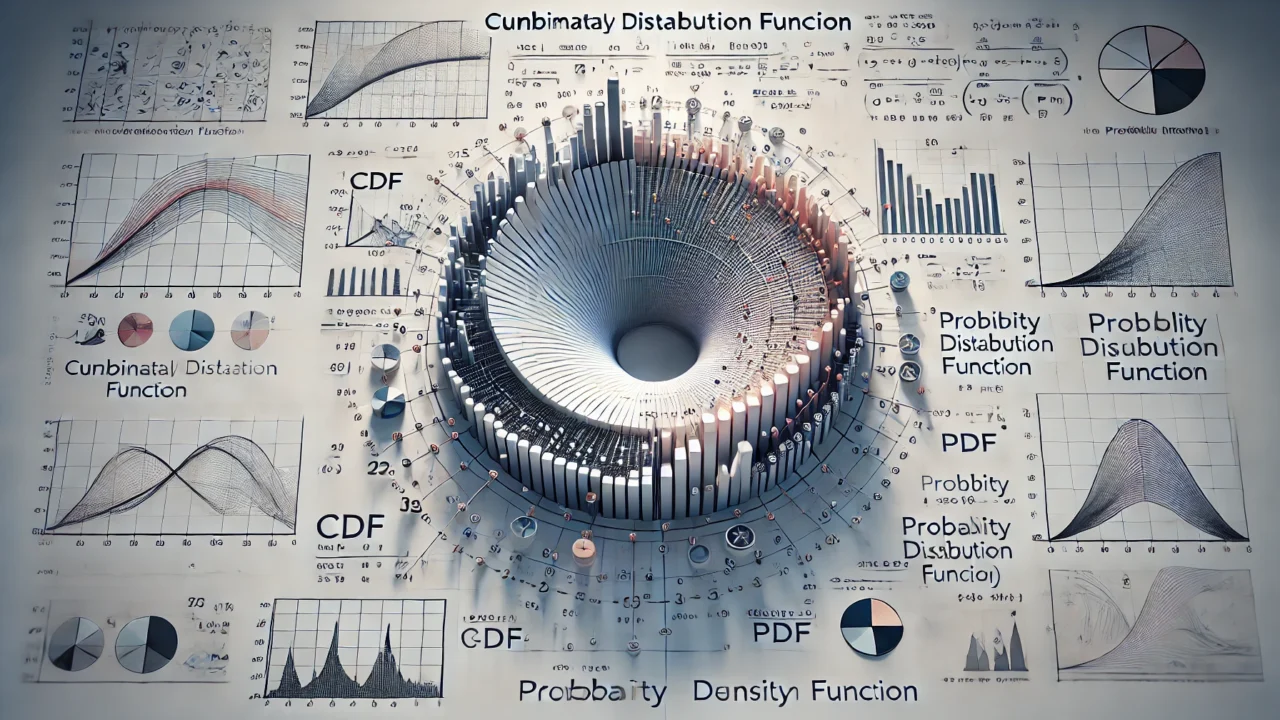

The probability integral, also known as the cumulative distribution function (CDF), is a fundamental concept in probability theory: it gives the probability that a random variable takes a value less than or equal to a given value.

Definition of the Probability Integral

For a continuous random variable \( X \) with probability density function (PDF) \( f(x) \), the cumulative distribution function \( F(x) \) is defined as:

$$ F(x) = \mathbb{P}(X \leq x) = \int_{-\infty}^{x} f(t) \, dt $$

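where \( \mathbb{P} \) denotes probability. The PDF is non-negative, \( f(x) \geq 0 \), and integrates to one over the real line, \( \int_{-\infty}^{\infty} f(t) \, dt = 1 \).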
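As a minimal sketch of the definition, the integral can be approximated numerically. The example assumes an exponential distribution with rate \( \lambda \) (not discussed in the original), whose PDF is \( \lambda e^{-\lambda t} \) for \( t \geq 0 \) and 0 otherwise, so the lower limit \( -\infty \) can be replaced by 0 and the result compared with the closed form \( F(x) = 1 - e^{-\lambda x} \). The helper names `exponential_pdf` and `cdf_by_trapezoid` are illustrative, and the trapezoidal rule is just one simple choice of quadrature.

```python
import math

def exponential_pdf(t: float, rate: float = 1.0) -> float:
    """PDF of an exponential distribution (illustrative example); zero for t < 0."""
    return rate * math.exp(-rate * t) if t >= 0 else 0.0

def cdf_by_trapezoid(pdf, x: float, lower: float, n: int = 10_000) -> float:
    """Approximate F(x) = integral of pdf from `lower` to x with the trapezoidal rule.

    `lower` stands in for -infinity: it must lie far enough left that the
    PDF carries negligible probability mass below it.
    """
    if x <= lower:
        return 0.0
    h = (x - lower) / n
    total = 0.5 * (pdf(lower) + pdf(x))  # endpoints get half weight
    for i in range(1, n):
        total += pdf(lower + i * h)      # interior points get full weight
    return total * h

rate = 2.0
for x in (0.25, 1.0, 3.0):
    approx = cdf_by_trapezoid(lambda t: exponential_pdf(t, rate), x, lower=0.0)
    exact = 1.0 - math.exp(-rate * x)  # closed-form exponential CDF
    print(f"x={x}: trapezoid={approx:.6f}, exact={exact:.6f}")
```

For a PDF supported on the whole real line, `lower` would have to be placed far enough into the left tail that the omitted mass is negligible.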

Properties of the Cumulative Distribution Function

1. **Non-decreasing**: The CDF is a non-decreasing function. If \( a < b \), then \( F(a) \leq F(b) \).

2. **Limits**: The CDF has the following limits:

$$ \lim_{x \to -\infty} F(x) = 0 $$

$$ \lim_{x \to \infty} F(x) = 1 $$

3. **Continuity**: The CDF of a continuous random variable is a continuous function.

4. **Relationship with PDF**: At every point \( x \) where the PDF \( f \) is continuous, the PDF is the derivative of the CDF:

$$ f(x) = \frac{dF(x)}{dx} $$

Example: Normal Distribution

The normal distribution is a common probability distribution in statistics. For a normal random variable \( X \) with mean \( \mu \) and standard deviation \( \sigma \), the PDF is given by:

$$ f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x - \mu)^2}{2\sigma^2}} $$

The CDF is:

$$ F(x) = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(t - \mu)^2}{2\sigma^2}} \, dt $$
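This integral has no closed form in elementary functions; it is usually written with the error function, \( F(x) = \tfrac{1}{2}\left[1 + \operatorname{erf}\left(\frac{x - \mu}{\sigma\sqrt{2}}\right)\right] \). The sketch below uses Python's standard-library `math.erf` and `statistics.NormalDist` (Python 3.8+) to evaluate that formula and cross-check it against `NormalDist.cdf`; the function name `normal_cdf_erf` and the parameter values are illustrative.

```python
import math
from statistics import NormalDist

def normal_cdf_erf(x: float, mu: float, sigma: float) -> float:
    """Normal CDF via the error function: 1/2 * [1 + erf((x - mu) / (sigma * sqrt(2)))]."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

mu, sigma = 1.0, 2.0
reference = NormalDist(mu=mu, sigma=sigma)
for x in (-3.0, 1.0, 2.5, 5.0):
    print(f"x={x}: erf formula={normal_cdf_erf(x, mu, sigma):.10f}, "
          f"NormalDist.cdf={reference.cdf(x):.10f}")
```

At \( x = \mu \) the formula gives exactly \( 1/2 \), and because the normal PDF is symmetric about \( \mu \), \( F(\mu - d) = 1 - F(\mu + d) \) for any \( d \).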

Derivations and Proofs

Proof that the CDF is Non-decreasing

Let \( a < b \). By definition of the CDF,

$$ F(b) = \int_{-\infty}^{b} f(t) \, dt $$

$$ F(a) = \int_{-\infty}^{a} f(t) \, dt $$

Since \( a < b \), we can write:

$$ F(b) = \int_{-\infty}^{a} f(t) \, dt + \int_{a}^{b} f(t) \, dt $$

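Subtracting \( F(a) \), and using the fact that the PDF is non-negative (\( f(t) \geq 0 \)), so its integral over \( [a, b] \) cannot be negative:

$$ F(b) - F(a) = \int_{a}^{b} f(t) \, dt \geq 0 $$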

Therefore, \( F(b) \geq F(a) \), which shows that the CDF is non-decreasing.
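The identity \( F(b) - F(a) = \int_{a}^{b} f(t) \, dt \) also means that \( F(b) - F(a) = \mathbb{P}(a < X \leq b) \). A minimal numerical check, assuming a standard normal \( X \) (via Python's `statistics.NormalDist`) and an illustrative Simpson's-rule helper `integrate_simpson`, compares the CDF difference with a direct integral of the PDF and tests monotonicity on a grid:

```python
from statistics import NormalDist

def integrate_simpson(g, a: float, b: float, n: int = 1000) -> float:
    """Composite Simpson's rule for the integral of g over [a, b]; n is made even."""
    if n % 2:
        n += 1
    h = (b - a) / n
    s = g(a) + g(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * g(a + i * h)  # Simpson weights 4, 2, 4, ...
    return s * h / 3

X = NormalDist(mu=0.0, sigma=1.0)
a, b = -0.5, 1.5
diff = X.cdf(b) - X.cdf(a)             # F(b) - F(a)
area = integrate_simpson(X.pdf, a, b)  # integral of f(t) from a to b
print(f"F(b) - F(a) = {diff:.10f}, integral = {area:.10f}")

# Monotonicity on a grid: consecutive CDF values never decrease.
grid = [-5.0 + 0.01 * k for k in range(1001)]
values = [X.cdf(x) for x in grid]
is_non_decreasing = all(v1 <= v2 for v1, v2 in zip(values, values[1:]))
print("non-decreasing on grid:", is_non_decreasing)
```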

Proof of the Limits of the CDF

1. For \( \lim_{x \to -\infty} F(x) \):

$$ \lim_{x \to -\infty} F(x) = \lim_{x \to -\infty} \int_{-\infty}^{x} f(t) \, dt = 0 $$

since \( f \) integrates to 1 over the entire real line, the integral converges, and the mass in the left tail, \( \int_{-\infty}^{x} f(t) \, dt \), tends to 0 as \( x \to -\infty \).

2. For \( \lim_{x \to \infty} F(x) \):

$$ \lim_{x \to \infty} F(x) = \lim_{x \to \infty} \int_{-\infty}^{x} f(t) \, dt = 1 $$

since the limit is the integral of \( f \) over the entire real line, \( \int_{-\infty}^{\infty} f(t) \, dt = 1 \).
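These limits hold for every PDF, but how fast the tails vanish depends on the distribution. As an illustration (the Cauchy distribution is an assumption added here for contrast, not part of the original text), the sketch below compares the tail masses \( F(-x) \) and \( 1 - F(x) \) of a standard normal with those of a standard Cauchy distribution, whose CDF is \( \tfrac{1}{2} + \arctan(x)/\pi \):

```python
import math
from statistics import NormalDist

def cauchy_cdf(x: float) -> float:
    """CDF of the standard Cauchy distribution (heavy-tailed example)."""
    return 0.5 + math.atan(x) / math.pi

normal = NormalDist(mu=0.0, sigma=1.0)
for x in (1.0, 10.0, 100.0, 1000.0):
    # Left-tail mass F(-x) and right-tail mass 1 - F(x); both shrink toward 0 as x grows.
    print(f"x={x:>7}: normal F(-x)={normal.cdf(-x):.3e}  "
          f"Cauchy F(-x)={cauchy_cdf(-x):.3e}  Cauchy 1-F(x)={1.0 - cauchy_cdf(x):.3e}")
```

The normal tail mass is already below \( 10^{-20} \) at \( x = 10 \), while the Cauchy tail decays only like \( \arctan(1/x)/\pi \approx 1/(\pi x) \); both still tend to 0, as the proof requires.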

Derivation of the PDF from the CDF

If \( F(x) \) is the CDF, then by definition:

$$ F(x) = \int_{-\infty}^{x} f(t) \, dt $$

Differentiating with respect to \( x \) and applying the fundamental theorem of calculus, which holds at every point where \( f \) is continuous,

$$ \frac{dF(x)}{dx} = f(x) $$

Thus, the PDF is the derivative of the CDF.
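This relationship can be checked numerically with a symmetric difference quotient \( (F(x+h) - F(x-h))/(2h) \). The sketch below assumes a normal distribution with illustrative parameters \( \mu = 2 \), \( \sigma = 0.5 \) (through `statistics.NormalDist`) and a hypothetical helper `central_difference`; it also includes an exponential CDF, whose PDF jumps from 0 to 1 at \( x = 0 \), to show that the derivative recovers \( f \) only where \( f \) is continuous.

```python
import math
from statistics import NormalDist

def central_difference(F, x: float, h: float = 1e-5) -> float:
    """Approximate F'(x) by the symmetric difference quotient."""
    return (F(x + h) - F(x - h)) / (2 * h)

X = NormalDist(mu=2.0, sigma=0.5)
for x in (1.0, 2.0, 2.75):
    print(f"x={x}: dF/dx approx {central_difference(X.cdf, x):.8f}, f(x) = {X.pdf(x):.8f}")

def exponential_cdf(x: float, rate: float = 1.0) -> float:
    """Exponential CDF; its PDF jumps from 0 to `rate` at x = 0."""
    return 1.0 - math.exp(-rate * x) if x >= 0 else 0.0

# At the jump, the symmetric quotient lands near rate / 2, matching neither one-sided PDF value.
jump_slope = central_difference(exponential_cdf, 0.0)
print(f"slope at the jump: {jump_slope:.6f}")
```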

Expected Value and Variance

The expected value \( \mathbb{E}[X] \) and variance \( \text{Var}(X) \) of a continuous random variable \( X \) with PDF \( f(x) \) are given by integrals weighted by the PDF, provided these integrals converge:

$$ \mathbb{E}[X] = \int_{-\infty}^{\infty} x f(x) \, dx $$

$$ \text{Var}(X) = \mathbb{E}[X^2] - (\mathbb{E}[X])^2 $$

where

$$ \mathbb{E}[X^2] = \int_{-\infty}^{\infty} x^2 f(x) \, dx $$
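As a sketch of these formulas, the moments can be computed by numerical integration and compared with known values. The example assumes a normal distribution with \( \mu = 1 \) and \( \sigma = 2 \) (so \( \mathbb{E}[X] = 1 \) and \( \text{Var}(X) = 4 \)), truncates the infinite range to \( \mu \pm 12\sigma \), and uses an illustrative Simpson's-rule helper; none of these choices come from the original text.

```python
from statistics import NormalDist

def integrate_simpson(g, a: float, b: float, n: int = 4000) -> float:
    """Composite Simpson's rule for the integral of g over [a, b]; n is made even."""
    if n % 2:
        n += 1
    h = (b - a) / n
    s = g(a) + g(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * g(a + i * h)
    return s * h / 3

mu, sigma = 1.0, 2.0
f = NormalDist(mu=mu, sigma=sigma).pdf
# Truncate the infinite range to mu +/- 12 sigma; the omitted tail mass is negligible.
a, b = mu - 12 * sigma, mu + 12 * sigma

mean = integrate_simpson(lambda x: x * f(x), a, b)               # E[X]
second_moment = integrate_simpson(lambda x: x * x * f(x), a, b)  # E[X^2]
variance = second_moment - mean ** 2                             # Var(X) = E[X^2] - (E[X])^2
print(f"E[X] approx {mean:.8f}, Var(X) approx {variance:.8f}")
```

For distributions with heavier tails, the truncation point would need to be chosen with more care, and for some (such as the Cauchy distribution) these integrals do not converge at all.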