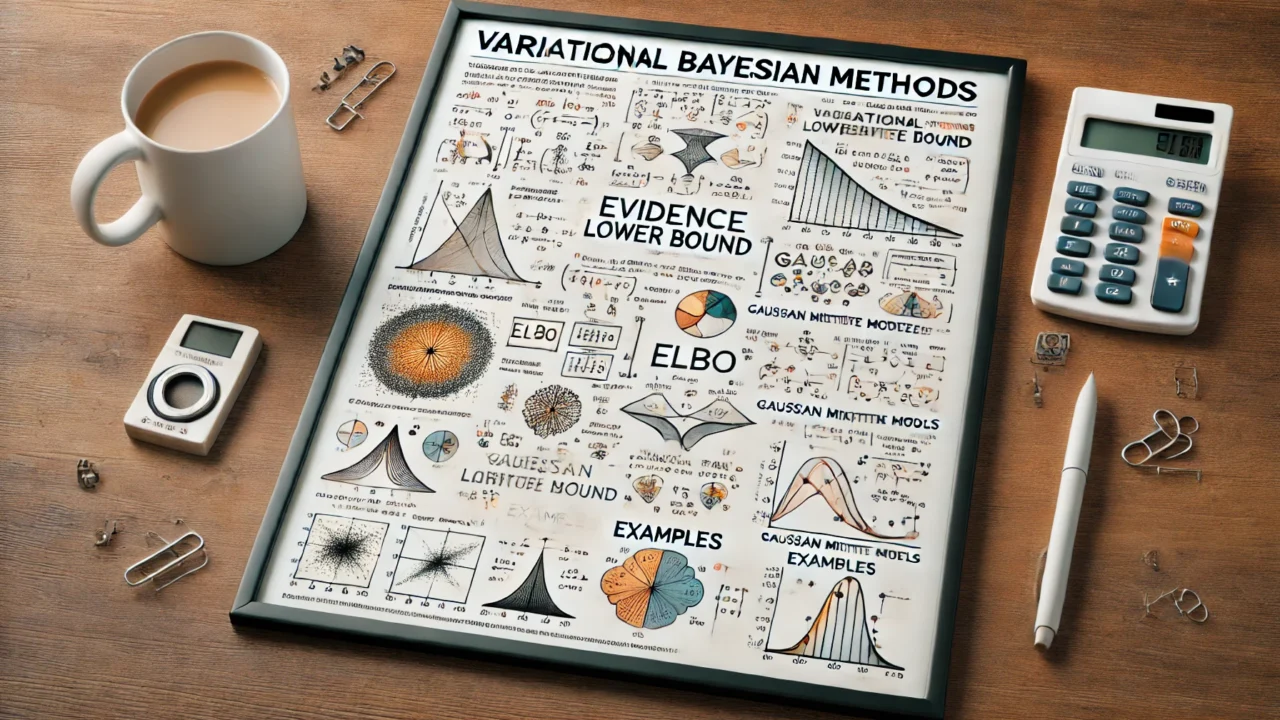

Variational Bayesian Methods: Detailed Explanation, Proofs, and Derivations

Variational Bayesian methods approximate the intractable integrals that arise in Bayesian inference. They recast posterior approximation as an optimization problem: from a tractable family of distributions, choose the member closest to the true posterior.

Introduction

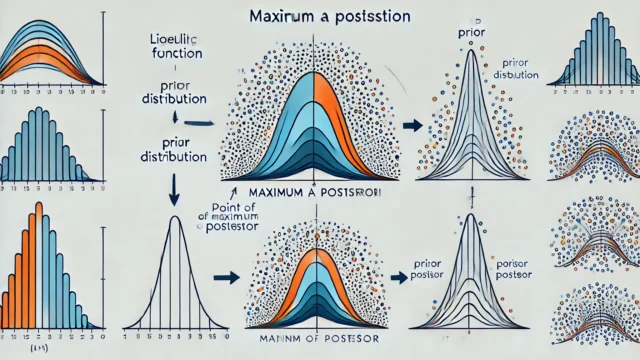

In Bayesian inference, we are often interested in the posterior distribution \( p(\theta \mid X) \), where \( \theta \) are the parameters and \( X \) is the observed data. The posterior distribution is given by Bayes’ theorem:

$$ p(\theta \mid X) = \frac{p(X \mid \theta)p(\theta)}{p(X)} $$

However, computing the posterior is often intractable because the normalization constant \( p(X) \), also called the evidence, requires integrating over all parameter values:

$$ p(X) = \int p(X \mid \theta)p(\theta) d\theta $$
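To make the normalization constant concrete, the following minimal sketch evaluates \( p(X) \) for a toy model in which the integral happens to be tractable. The model (a coin with unknown bias \( \theta \), a uniform prior, and \( k \) heads in \( n \) flips) and the function names are illustrative assumptions, not part of the derivation above.

```python
import math

def evidence_midpoint(k, n, grid_size=1000):
    """Approximate p(X) = integral over [0, 1] of theta^k (1 - theta)^(n - k)
    under a uniform prior, using the midpoint rule."""
    h = 1.0 / grid_size
    total = 0.0
    for i in range(grid_size):
        theta = (i + 0.5) * h                      # midpoint of the i-th cell
        total += theta ** k * (1.0 - theta) ** (n - k) * h
    return total

def evidence_exact(k, n):
    """Closed form for the same integral: the Beta function B(k + 1, n - k + 1)."""
    return math.exp(math.lgamma(k + 1) + math.lgamma(n - k + 1) - math.lgamma(n + 2))

# Hypothetical data: 3 heads in 4 flips (one fixed sequence of outcomes).
k, n = 3, 4
approx = evidence_midpoint(k, n)
exact = evidence_exact(k, n)   # B(4, 2) = 1/20 for these numbers
print(approx, exact)
```

A one-dimensional grid is cheap, but a grid with \( G \) points per dimension needs \( G^d \) evaluations for \( d \) parameters, which is why direct quadrature does not scale to realistic models.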

Variational Bayesian methods approximate the posterior distribution by introducing a variational distribution \( q(\theta) \) and minimizing the Kullback-Leibler (KL) divergence between \( q(\theta) \) and the true posterior \( p(\theta \mid X) \).

Variational Inference

The KL divergence between \( q(\theta) \) and \( p(\theta \mid X) \) is defined as:

$$ KL(q(\theta) \| p(\theta \mid X)) = \int q(\theta) \log \frac{q(\theta)}{p(\theta \mid X)} d\theta $$

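Substituting \( p(\theta \mid X) = p(X, \theta) / p(X) \) into the KL divergence and rearranging gives the decomposition

$$ \log p(X) = \mathcal{L}(q) + KL(q(\theta) \| p(\theta \mid X)) $$

Because \( \log p(X) \) does not depend on \( q \) and the KL divergence is always non-negative, \( \mathcal{L}(q) \le \log p(X) \), and minimizing the KL divergence is equivalent to maximizing \( \mathcal{L}(q) \), the evidence lower bound (ELBO):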

$$ \mathcal{L}(q) = \mathbb{E}_q[\log p(X, \theta)] - \mathbb{E}_q[\log q(\theta)] $$

The ELBO can be rewritten as:

$$ \mathcal{L}(q) = \int q(\theta) \log \frac{p(X, \theta)}{q(\theta)} d\theta $$

Maximizing the ELBO with respect to \( q(\theta) \) yields the member of the chosen variational family that is closest to the true posterior in the sense of the KL divergence above.
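The relationship between the ELBO, the KL divergence, and the evidence, \( \log p(X) = \mathcal{L}(q) + KL(q \| p(\cdot \mid X)) \), can be checked numerically. The sketch below is a toy setup under stated assumptions: \( \theta \) is restricted to a small hypothetical grid so that every integral becomes an exact finite sum, and the data and the choice of \( q \) are arbitrary.

```python
import math

# Hypothetical toy model: theta lives on a small grid, so integrals are sums.
thetas = [0.1, 0.3, 0.5, 0.7, 0.9]
prior = [0.2] * 5                       # uniform prior p(theta)
data = [1, 1, 0, 1]                     # observed coin flips X

def likelihood(theta, xs):
    """p(X | theta) for independent Bernoulli observations."""
    return math.prod(theta if x == 1 else 1.0 - theta for x in xs)

joint = [likelihood(t, data) * p for t, p in zip(thetas, prior)]   # p(X, theta)
evidence = sum(joint)                                             # p(X)
posterior = [j / evidence for j in joint]                         # p(theta | X)

def elbo(q):
    """L(q) = sum over theta of q(theta) * log(p(X, theta) / q(theta))."""
    return sum(qi * math.log(ji / qi) for qi, ji in zip(q, joint) if qi > 0)

def kl(q, p):
    """KL(q || p) = sum over theta of q(theta) * log(q(theta) / p(theta))."""
    return sum(qi * math.log(qi / pi) for qi, pi in zip(q, p) if qi > 0)

q = [0.1, 0.2, 0.3, 0.3, 0.1]           # an arbitrary variational distribution

log_evidence = math.log(evidence)
print(log_evidence, elbo(q) + kl(q, posterior))   # the two values agree
print(elbo(posterior))                            # the bound is tight at q = posterior
```

Any valid \( q \) gives an ELBO no larger than \( \log p(X) \), and the gap is exactly the KL divergence, so pushing the ELBO up pulls \( q \) toward the posterior.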

Coordinate Ascent Variational Inference

A common approach to variational inference is coordinate ascent variational inference (CAVI), which iteratively updates each factor of the variational distribution while holding the others fixed.

Assume the variational distribution factorizes as:

$$ q(\theta) = \prod_{i=1}^d q_i(\theta_i) $$

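Holding all other factors fixed, the ELBO viewed as a function of \( q_i \) alone equals, up to an additive constant, the negative KL divergence between \( q_i \) and the distribution proportional to \( \exp(\mathbb{E}_{j \neq i}[\log p(X, \theta)]) \), where \( \mathbb{E}_{j \neq i} \) denotes the expectation under all factors \( q_j \) with \( j \neq i \). The optimal factor \( q_i(\theta_i) \) is therefore: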

$$ q_i(\theta_i) \propto \exp \left( \mathbb{E}_{j \neq i}[\log p(X, \theta)] \right) $$
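As an illustration of the coordinate updates, the sketch below applies them to a target whose log density is a bivariate Gaussian with mean \( \mu \) and precision matrix \( \Lambda \). This target, the function name, and the numbers are assumptions chosen because the optimal factors are then Gaussian in closed form: \( q_1 = \mathcal{N}(m_1, \Lambda_{11}^{-1}) \) with \( m_1 = \mu_1 - \Lambda_{11}^{-1} \Lambda_{12} (m_2 - \mu_2) \), and symmetrically for \( q_2 \).

```python
def cavi_bivariate_gaussian(mu, prec, n_iters=50):
    """Mean-field CAVI for a 2-D Gaussian target N(mu, prec^{-1}).

    Each factor q_i is Gaussian with fixed variance 1 / prec[i][i]; only the
    factor means change, and each update uses the current mean of the other factor.
    """
    (l11, l12), (l21, l22) = prec
    m1, m2 = 0.0, 0.0                                # arbitrary initialization
    for _ in range(n_iters):
        m1 = mu[0] - (l12 / l11) * (m2 - mu[1])      # update q_1 with q_2 fixed
        m2 = mu[1] - (l21 / l22) * (m1 - mu[0])      # update q_2 with q_1 fixed
    return (m1, 1.0 / l11), (m2, 1.0 / l22)

# Hypothetical target: correlated Gaussian with a positive-definite precision matrix.
mu = (1.0, -2.0)
prec = ((2.0, 0.9), (0.9, 1.5))
(q1_mean, q1_var), (q2_mean, q2_var) = cavi_bivariate_gaussian(mu, prec)
print(q1_mean, q1_var, q2_mean, q2_var)
```

In this example the factor means converge to the true mean, while the factor variances \( 1 / \Lambda_{ii} \) are smaller than the true marginal variances. This underestimation is a known side effect of the factorized approximation when the target is correlated.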

Example: Variational Inference for Gaussian Mixture Models

Consider a Gaussian mixture model with \( K \) components. The observed data \( X = \{ x_1, x_2, \ldots, x_N \} \) is assumed to be generated from a mixture of \( K \) Gaussian distributions with parameters \( \theta = \{ \pi_k, \mu_k, \Sigma_k \}_{k=1}^K \), where \( \pi_k \) are the mixture weights, \( \mu_k \) are the means, and \( \Sigma_k \) are the covariances.

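Introduce latent assignments \( Z = \{ z_1, z_2, \ldots, z_N \} \), where \( z_n \in \{ 1, \ldots, K \} \) indicates the component that generated \( x_n \). With a prior \( p(\theta) \) on the parameters, the joint distribution is given by: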

$$ p(X, Z, \theta) = p(\theta) \prod_{n=1}^N p(z_n \mid \pi) p(x_n \mid z_n, \mu, \Sigma) $$

The variational distribution over both the latent assignments and the parameters is assumed to factorize as:

$$ q(Z, \theta) = q(\pi) \prod_{k=1}^K q(\mu_k, \Sigma_k) \prod_{n=1}^N q(z_n) $$

The ELBO for the GMM is:

$$ \mathcal{L}(q) = \mathbb{E}_q[\log p(X, Z, \theta)] - \mathbb{E}_q[\log q(Z, \theta)] $$

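Assuming conjugate priors (a Dirichlet prior with parameters \( \alpha_k \) on \( \pi \) and a Normal-Wishart prior on each component), applying the coordinate ascent rule gives the following updates. The assignment factor depends on expectations under the current parameter factors, not on point estimates of the parameters:

$$ q(z_n = k) \propto \exp \left( \mathbb{E}_q[\log \pi_k] + \mathbb{E}_q[\log \mathcal{N}(x_n \mid \mu_k, \Sigma_k)] \right) $$

The mixture weights receive a Dirichlet factor whose parameters add the expected component counts to the prior parameters:

$$ q(\pi) = \text{Dir}(\hat{\alpha}_1, \ldots, \hat{\alpha}_K), \quad \hat{\alpha}_k = \alpha_k + \sum_{n=1}^N q(z_n = k) $$

Each component receives a Normal-Wishart factor over its mean \( \mu_k \) and precision \( \Sigma_k^{-1} \), with hyperparameters updated from the statistics of the data weighted by \( q(z_n = k) \):

$$ q(\mu_k, \Sigma_k) = \mathcal{N}\mathcal{W}(m_k, \beta_k, W_k, \nu_k) $$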
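The assignment and mixture-weight updates can be sketched in a few lines. This is a deliberately simplified illustration, not the full algorithm: the data are one-dimensional, the component means and variances are held fixed at hypothetical point values (so the Normal-Wishart update is omitted and the expected log density reduces to the log density itself), and `digamma` is a small hand-written approximation. For a Dirichlet factor with parameters \( a_k \), the expected log weight is \( \mathbb{E}[\log \pi_k] = \psi(a_k) - \psi(\sum_j a_j) \), where \( \psi \) is the digamma function.

```python
import math

def digamma(x):
    """Digamma function for x > 0: recurrence plus an asymptotic series."""
    result = 0.0
    while x < 6.0:                      # shift x upward, where the series is accurate
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    return result + math.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))

def log_normal_pdf(x, mean, var):
    """Log density of a univariate Gaussian."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)

def update_responsibilities(xs, alpha, means, variances):
    """q(z_n = k) proportional to exp(E[log pi_k] + log N(x_n | mean_k, var_k))."""
    alpha_sum = sum(alpha)
    e_log_pi = [digamma(a) - digamma(alpha_sum) for a in alpha]
    r = []
    for x in xs:
        log_rho = [e + log_normal_pdf(x, m, v)
                   for e, m, v in zip(e_log_pi, means, variances)]
        top = max(log_rho)              # subtract the max for numerical stability
        weights = [math.exp(l - top) for l in log_rho]
        total = sum(weights)
        r.append([w / total for w in weights])
    return r

def update_dirichlet(alpha_prior, r):
    """Dirichlet parameters: prior parameter plus expected count per component."""
    return [a + sum(row[k] for row in r) for k, a in enumerate(alpha_prior)]

# Hypothetical data and fixed component parameters (assumptions for illustration).
xs = [-2.1, -1.9, -2.0, 3.0, 3.2]
alpha_prior = [1.0, 1.0]
means, variances = [-2.0, 3.0], [1.0, 1.0]

r = update_responsibilities(xs, alpha_prior, means, variances)
alpha_new = update_dirichlet(alpha_prior, r)
print(alpha_new)
```

In a full implementation these two steps would alternate with the Normal-Wishart update for each component until the ELBO stops increasing.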